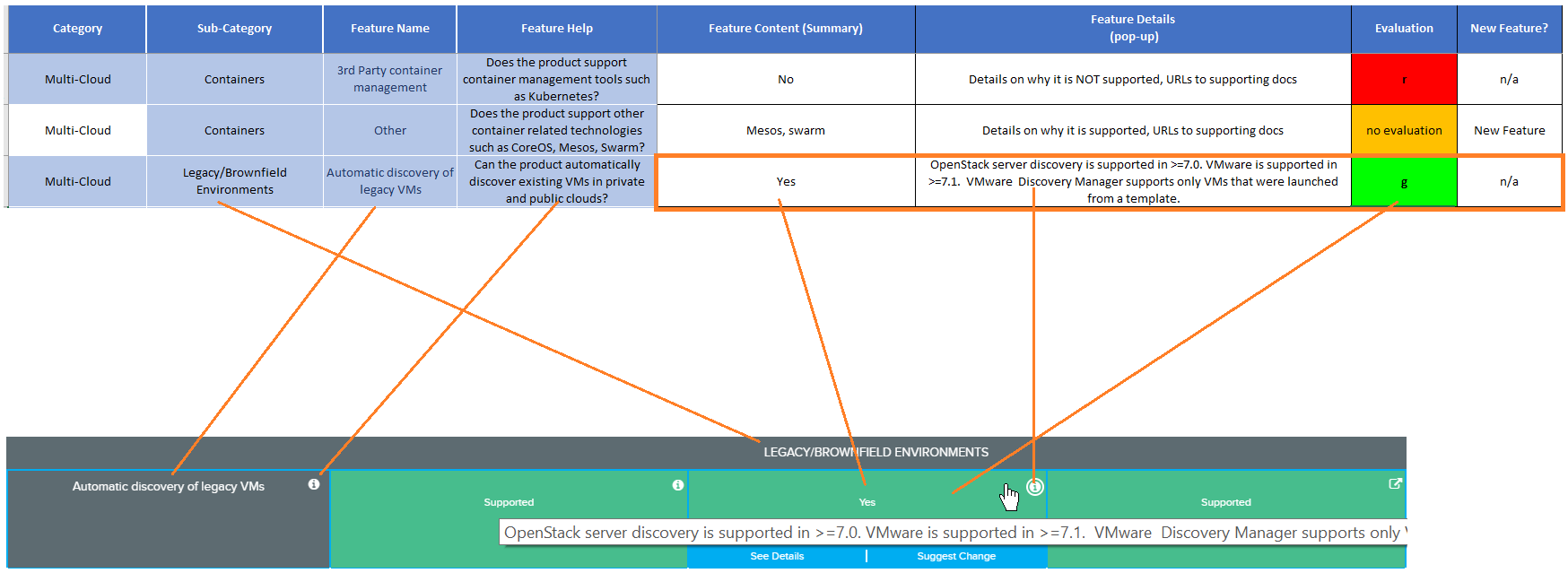

Data is collected and stored in templates. This article explains how to fill in the templates and how the data in the template maps to the comparison tables (matrix).

The first 4 columns of the Data Template reflect the structure of the comparison (taxonomy), consisting of categories, sub-categories and features (with feature help) – this is provided by WhatMatrix.

The next 4 columns must be filled in for each evaluated product.

What to fill in

The data for each vendor/product is collected in columns 5, 6, 7 and 8 (repeat for every feature row):

- Feature Content – Provide a short summary of the evaluation, e.g. “Yes”, “256GB”, “Limited support”, etc.

- Feature Details – Provide details on the evaluation and links to supporting documentation. Help the reader understand “why” you evaluated it this way.

- Evaluation – Select an evaluation color: Green = Fully supported, Yellow = Supported with limitations (referred to as Amber in the guidance below), Red = Unsupported or severe limitations, Gray = Information only

- New feature? – Select “New” to highlight new features for the product release (visitors can skim just for “new” labels to see relevant updates since the last version)
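
As a rough illustration, one template row could be modelled as a record holding the eight columns described above. The class and field names are hypothetical (they are not WhatMatrix identifiers), and the taxonomy values in the sample row are made up; only the column meanings and the example content values come from this article.

```python
from dataclasses import dataclass
from enum import Enum


class Evaluation(Enum):
    """Evaluation colors as listed in the guidance above."""
    GREEN = "Fully supported"
    YELLOW = "Supported with limitations"
    RED = "Unsupported or severe limitations"
    GRAY = "Information only"


@dataclass
class TemplateRow:
    # Columns 1-4: taxonomy, provided by WhatMatrix
    category: str
    sub_category: str
    feature: str
    feature_help: str
    # Columns 5-8: filled in for each evaluated product
    feature_content: str      # short summary, e.g. "Yes" or "256GB"
    feature_details: str      # reasoning plus links to documentation
    evaluation: Evaluation
    is_new: bool = False      # True when "New" is selected


# Hypothetical row: the taxonomy values below are invented for illustration.
row = TemplateRow(
    category="Storage",
    sub_category="Capacity",
    feature="Maximum disk size",
    feature_help="Largest supported virtual disk",
    feature_content="256GB",
    feature_details="Documented limit; link to the vendor documentation here.",
    evaluation=Evaluation.GREEN,
)
print(row.feature_content, row.evaluation.name)
```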

Mapping of fields in the template to the matrix table

Tips:

- Features that are “Information Only” (gray) do not require an evaluation (color). All other rows MUST have an evaluation color set.

- List URLs in the “Feature details” column (not the “Feature content” column)

- Provide as much detail in the “details” column as you can – especially to justify your evaluations
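
The first two tips can be checked mechanically. The following is a minimal sketch, assuming each row is available as plain strings and that information-only rows are marked with a hypothetical `info_only` flag (the real template may identify them differently). The function name and the URL pattern are likewise illustrative.

```python
import re

VALID_COLORS = {"Green", "Yellow", "Red", "Gray"}
# Simple pattern for http(s) links; an assumption, not a full URL parser.
URL_PATTERN = re.compile(r"https?://\S+")


def check_row(content, details, evaluation, info_only=False):
    """Return a list of problems found in one feature row (empty if none)."""
    problems = []
    if evaluation and evaluation not in VALID_COLORS:
        problems.append(f"unknown evaluation color: {evaluation}")
    # Only information-only rows may leave the evaluation color empty.
    if not evaluation and not info_only:
        problems.append("evaluation color is missing")
    # URLs belong in the details column, not in the content column.
    if URL_PATTERN.search(content):
        problems.append("move URLs from feature content to feature details")
    return problems


print(check_row("Yes", "See https://example.com/docs", "Green"))
print(check_row("https://example.com/docs", "", ""))
```

The third tip (enough detail to justify the evaluation) is a judgment call and is deliberately not checked here.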

Evaluation (color) guidance

To evaluate – ask yourself two questions:

1. Can it “do it”? (functional)

2. How “easily” can it do it? Any Gotchas? (non-functional effort / operational gotchas)

Colors / Ratings (consider both your functional and your non-functional answer):

– Limitations should be reflected with an AMBER rating

– Unsupported or VERY limited functionality should be reflected with a RED rating

What are Limitations?

Again, limitations can be functional or non-functional, e.g.:

- additional cost,

- additional implementation effort,

- maintenance effort,

- lack of automation,

- reliability, performance, etc.

Point out any “gotchas” or limitations that you would want to be aware of yourself. This is valuable to the reader.

Example Ratings

– function fully provided / no notable limitations: Green (2 points)

– function fully provided / known performance issues: Amber (1 point)

– function partially provided: Amber

– additional cost (fee-based vendor plugin): Amber

– Severe functional / operational limitations: Red

– Can’t provide the function: Red
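
The example ratings above can be sketched as a small decision function. This is an illustration only: the function name, its parameters and the "full" / "partial" / "none" labels are hypothetical, and deciding whether a limitation counts as severe remains the evaluator's judgment. The point values are the ones stated above; no value is given for Red, so it is left unset.

```python
# Points per color as stated in the example ratings.
# No point value is given for Red, so it is left as None here.
POINTS = {"Green": 2, "Amber": 1, "Red": None}


def rate(can_do, limitations=False, severe=False):
    """Suggest a color from the two evaluation questions.

    can_do:      "full", "partial" or "none" (functional answer)
    limitations: any notable limitation (cost, effort, performance, ...)
    severe:      the limitations are severe (functional or operational)
    """
    if can_do not in ("full", "partial", "none"):
        raise ValueError(f"unexpected can_do value: {can_do!r}")
    if can_do == "none" or severe:
        return "Red"
    if can_do == "partial" or limitations:
        return "Amber"
    return "Green"


for args in [("full",), ("full", True), ("partial",), ("full", True, True), ("none",)]:
    color = rate(*args)
    print(args, "->", color, POINTS[color])
```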

Can I include plugins or tools the native vendor provides (i.e. add-ons not provided with the product itself)?

Yes, you can include both FEE-BASED and FREE add-ons the vendor provides, but consider limitations in the evaluation, e.g. additional cost or integration effort:

- free / provides full functionality / no additional integration effort: Green

- fee based / good functionality: Amber

- fee based / limited functionality: Amber to Red (Red if not worth it)

Can I include 3rd Party plugins or tools?

Only include FREE / open source products in evaluations (colors) – consider any limitations in the evaluation (e.g. dealing with a third party, integration effort)

Do NOT include Fee-Based 3rd party products in evaluations (exceeds scope) – feel free to list them as “FYI” or include them in the Matrix-place as “Add-on”
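
The add-on rules above can be summarised in a short sketch. All names are hypothetical, and two simplifications are assumed: free third-party tools are rated like free vendor add-ons (whether dealing with a third party is itself a limitation is left to the evaluator), and any free add-on with a limitation is rated Amber, in line with the general color guidance.

```python
from typing import Optional


def addon_rating(source: str, fee_based: bool, full_functionality: bool = True,
                 integration_effort: bool = False,
                 worth_it: bool = True) -> Optional[str]:
    """Suggest a color for an add-on, or None if it is out of scope.

    source: "vendor" (native vendor add-on) or "third_party"
    """
    if source not in ("vendor", "third_party"):
        raise ValueError(f"unexpected source: {source!r}")
    # Fee-based third-party products are not evaluated (list them as FYI only).
    if source == "third_party" and fee_based:
        return None
    if fee_based:
        # Additional cost is a limitation, so Amber is the best case.
        if not full_functionality and not worth_it:
            return "Red"
        return "Amber"
    # Free add-on: Green only with full functionality and no extra effort.
    if full_functionality and not integration_effort:
        return "Green"
    return "Amber"


print(addon_rating("vendor", fee_based=False))
print(addon_rating("vendor", fee_based=True))
print(addon_rating("third_party", fee_based=True))
```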

Please Note:

WhatMatrix cannot publish direct vendor submissions. All data must be curated by the category consultant (comparison manager) and the contributing category specialists, and will be subject to open community curation once published.